
Performance Tests

tests/performance is a framework for automated benchmarking of Blender features, with real-world .blend files. It's designed both for developers to use locally, and to run as part of continuous integration.

It's in early stages and is currently only used for benchmarking Cycles. However, the framework is generic and intended to cover more Blender features over time.

Setup

Unlike correctness tests, benchmarking involves multiple revisions or builds. For that reason, test configuration and results are located outside the build directory, in a benchmark directory next to the source code.

The following command creates this directory and a default configuration:

./benchmark.py init

The default configuration looks like this, and includes all CPU tests:

devices = ['CPU']
tests = ['*']
categories = ['*']
builds = {
    'master': '/home/user/blender-git/build/bin/blender',
    '2.93': '/home/user/blender-2.93/blender',
}

You can modify this configuration to point to existing Blender builds to compare, or set up automated building instead (see below).
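Before starting a long benchmark run, it can help to confirm that every configured build path points to an executable. The following is a minimal sketch, not part of the framework: `missing_builds` is a hypothetical helper, and the `builds` dictionary simply repeats the paths from the default configuration.

```python
import os


def missing_builds(builds):
    """Return the names of builds whose path is missing or not executable.

    Hypothetical helper for illustration; it is not provided by benchmark.py.
    """
    missing = []
    for name, path in builds.items():
        if not (os.path.isfile(path) and os.access(path, os.X_OK)):
            missing.append(name)
    return missing


# Paths repeated from the default configuration; adjust them to your setup.
builds = {
    'master': '/home/user/blender-git/build/bin/blender',
    '2.93': '/home/user/blender-2.93/blender',
}

for name in missing_builds(builds):
    print(f"Build '{name}' not found at {builds[name]}")
```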

Downloading tests

The .blend files used by modules for performance testing are stored in a Subversion repository. Check them out into a lib directory inside the source folder:

cd ~/blender-git
mkdir lib
cd lib
svn checkout https://svn.blender.org/svnroot/bf-blender/trunk/lib/benchmarks

Running Tests

To run the default configuration from the blender/tests/performance/ directory in the source code:

./benchmark.py run default

Timings will be printed to the console, and a results.html page with graphs will be generated.

For all available commands, see:

./benchmark.py help

When the git hash of a revision changes, or an executable is modified, results are marked as outdated. ./benchmark.py update will then automatically re-run only the tests for the modified builds or revisions.
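The outdated check described above can be pictured as a comparison between what was recorded when a result was produced and the current state. This page does not describe how the framework stores results, so the sketch below is an assumption throughout: the `git_hash` and `executable_mtime` fields and the `is_outdated` function are hypothetical names.

```python
import os


def is_outdated(stored, git_hash=None, executable=None):
    """Return True if a stored result no longer matches the revision or build.

    `stored` is a hypothetical record with optional 'git_hash' and
    'executable_mtime' entries; the real storage format may differ.
    """
    # A revision is outdated when its branch or tag now points elsewhere.
    if git_hash is not None and stored.get('git_hash') != git_hash:
        return True
    # A build is outdated when the executable was modified after the run.
    if executable is not None:
        if stored.get('executable_mtime') != os.path.getmtime(executable):
            return True
    return False


stored = {'git_hash': 'abc123'}  # placeholder hash, not a real commit
print(is_outdated(stored, git_hash='abc123'))  # False
print(is_outdated(stored, git_hash='def456'))  # True
```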

Configurations

A configuration is a subset of performance tests, devices and revisions. It can easily be run, re-run, analyzed and graphed. This is convenient when working on specific features or optimizations.

Configurations can be added by creating a new folder in the benchmark directory with a config.py file.

Example configuration for Cycles GPU rendering:

devices = ['OPTIX_*']
categories = ['cycles']
revisions = {
    'master': 'master',
    'cycles-x': 'cycles-x',
}
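Patterns such as 'OPTIX_*' and '*' select entries by name. The sketch below illustrates that selection under the assumption that the patterns follow shell-style wildcard rules, as implemented by Python's `fnmatch` module; the `select` helper is hypothetical, and the device names are taken from the example output of `./benchmark.py list` on this page.

```python
from fnmatch import fnmatchcase


def select(names, patterns):
    """Return the names matching at least one shell-style pattern.

    Hypothetical helper; the framework's own matching rules may differ.
    """
    return [n for n in names if any(fnmatchcase(n, p) for p in patterns)]


devices = ['CPU', 'CUDA_0', 'OPTIX_0']

print(select(devices, ['OPTIX_*']))  # ['OPTIX_0']
print(select(devices, ['*']))        # ['CPU', 'CUDA_0', 'OPTIX_0']
```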

By default, a bar chart is generated, which is useful when comparing a handful of revisions. To visualize many commits over time, a line chart can be used instead by setting:

benchmark_type = 'time_series'

Automated Building

The benchmarking tool can automatically build Blender. This is convenient if you want to quickly test multiple revisions, or want to avoid modifying your own build configuration for correct benchmarking. Ensuring your development build is configured in release mode without debugging aids can be tedious and error-prone.

The following command sets up a dedicated git worktree and build in the benchmark directory:

./benchmark.py init --build

You can then specify any commit hashes, branches or tags recognized by git:

devices = ['CPU']
revisions = {
    'new': 'my-feature-branch',
    'old': 'main',
}
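To see which commit a configured revision currently points to, the name can be resolved with `git rev-parse`. This is a minimal sketch for checking a configuration by hand, not the tool's own implementation; `resolve_revision` is a hypothetical helper, and `~/blender-git` is the source path used earlier on this page.

```python
import os
import subprocess


def resolve_revision(repo_dir, revision):
    """Resolve a branch, tag or hash to a full commit hash.

    Returns None if the revision is unknown, the directory is not usable,
    or git is not installed. Hypothetical helper for illustration.
    """
    try:
        result = subprocess.run(
            ['git', 'rev-parse', '--verify', '--quiet',
             f'{revision}^{{commit}}'],
            cwd=repo_dir, capture_output=True, text=True, check=False)
    except OSError:
        return None
    return result.stdout.strip() if result.returncode == 0 else None


if __name__ == '__main__':
    repo = os.path.expanduser('~/blender-git')
    for name, revision in {'new': 'my-feature-branch', 'old': 'main'}.items():
        print(name, resolve_revision(repo, revision))
```

A `None` result indicates that git could not resolve the name, which usually means a typo in the branch or tag.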

Future Plans

The main ideas for improvements are:

- Run tests multiple times and generate error intervals

- Run nightly as part of continuous integration

- Support benchmarking more Blender features

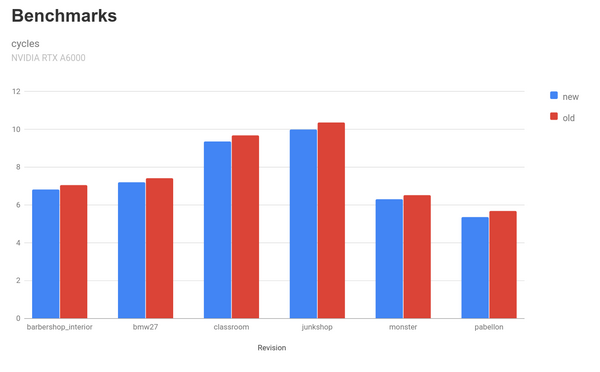

Example Output

# ./benchmark.py list
DEVICES
CPU      AMD Ryzen Threadripper 2990WX 32-Core Processor (Linux)
CUDA_0   NVIDIA RTX A6000 (Linux)
OPTIX_0  NVIDIA RTX A6000 (Linux)

TESTS
cycles   barbershop_interior
cycles   bmw27
cycles   monster
cycles   junkshop
cycles   classroom
cycles   pabellon

CONFIGS
cycles-x

# ./benchmark.py run cycles-x
                     new       old
barbershop_interior  6.8324s   7.0675s
bmw27                7.2180s   7.4321s
classroom            9.3709s   9.6966s
junkshop             10.0036s  10.3727s
monster              6.3217s   6.5380s
pabellon             5.3753s   5.6998s
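Raw timings are easier to compare as relative changes. The sketch below computes the change of the "new" column relative to "old" using the numbers from the run above; `relative_change` is a hypothetical helper and not something benchmark.py prints.

```python
# Timings in seconds, copied from the example run: (new, old).
timings = {
    'barbershop_interior': (6.8324, 7.0675),
    'bmw27': (7.2180, 7.4321),
    'classroom': (9.3709, 9.6966),
    'junkshop': (10.0036, 10.3727),
    'monster': (6.3217, 6.5380),
    'pabellon': (5.3753, 5.6998),
}


def relative_change(new, old):
    """Fractional change of new relative to old; negative means faster."""
    return (new - old) / old


for name, (new, old) in sorted(timings.items()):
    print(f"{name:<20} {relative_change(new, old):+.1%}")
```

In this run every scene is faster with the new revision, by roughly 3% for barbershop_interior and close to 6% for pabellon.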